Big Data development company

Turn your data into a competitive advantage!

We are professional developers who build custom Big Data solutions that turn raw, unstructured data into a strategic business asset. Our expertise enables us to deliver Big Data solutions of unmatched quality, distinguished by four characteristics.

Big Data services you need

Project duration and budget are two criteria that affect the choice of engagement model.

Big Data technologies

These technologies are designed to handle the vast, complex datasets of the modern age. They process high-volume, high-velocity, and high-variety information assets that demand cost-effective, innovative forms of processing for better insight and decision-making. Typical use cases include:

- social media and patient data analysis;

- personalized content recommendations for streaming platforms;

- predictive analytics for customer behavior in eCommerce;

- credit risk evaluation;

- machine learning in autonomous vehicles and targeted advertising;

- the Internet of Things for predictive maintenance in manufacturing;

- unusual transaction detection and network traffic analysis.

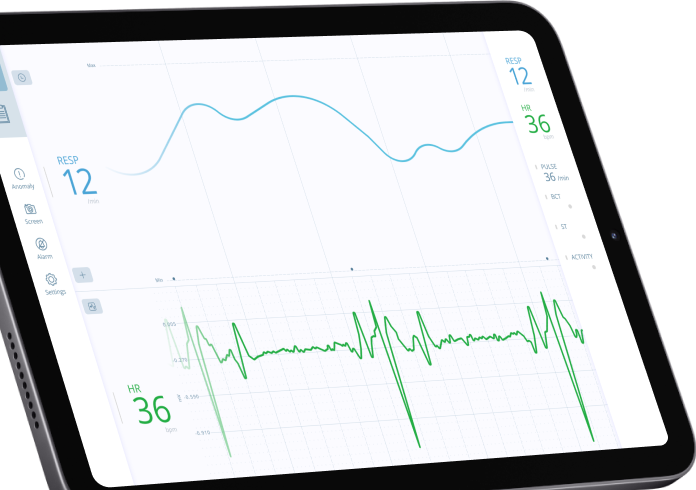

Traditional technologies

Traditional technologies focus on processing and analyzing structured data, such as the tabular datasets found in finance, customer relationship management, enterprise resource planning, and accounting. Businesses rely on structured data in their daily operations to inform strategic and operational decisions. Typical applications include:

- daily banking transactions;

- data reporting and BI;

- ERP data management;

- CRM data management;

- budgeting and accounting;

- inventory and order management.

Big Data services we offer

We provide end-to-end Big Data software development services, from strategy to deployment.

Data engineering

Big Data analytics & BI

Data integration

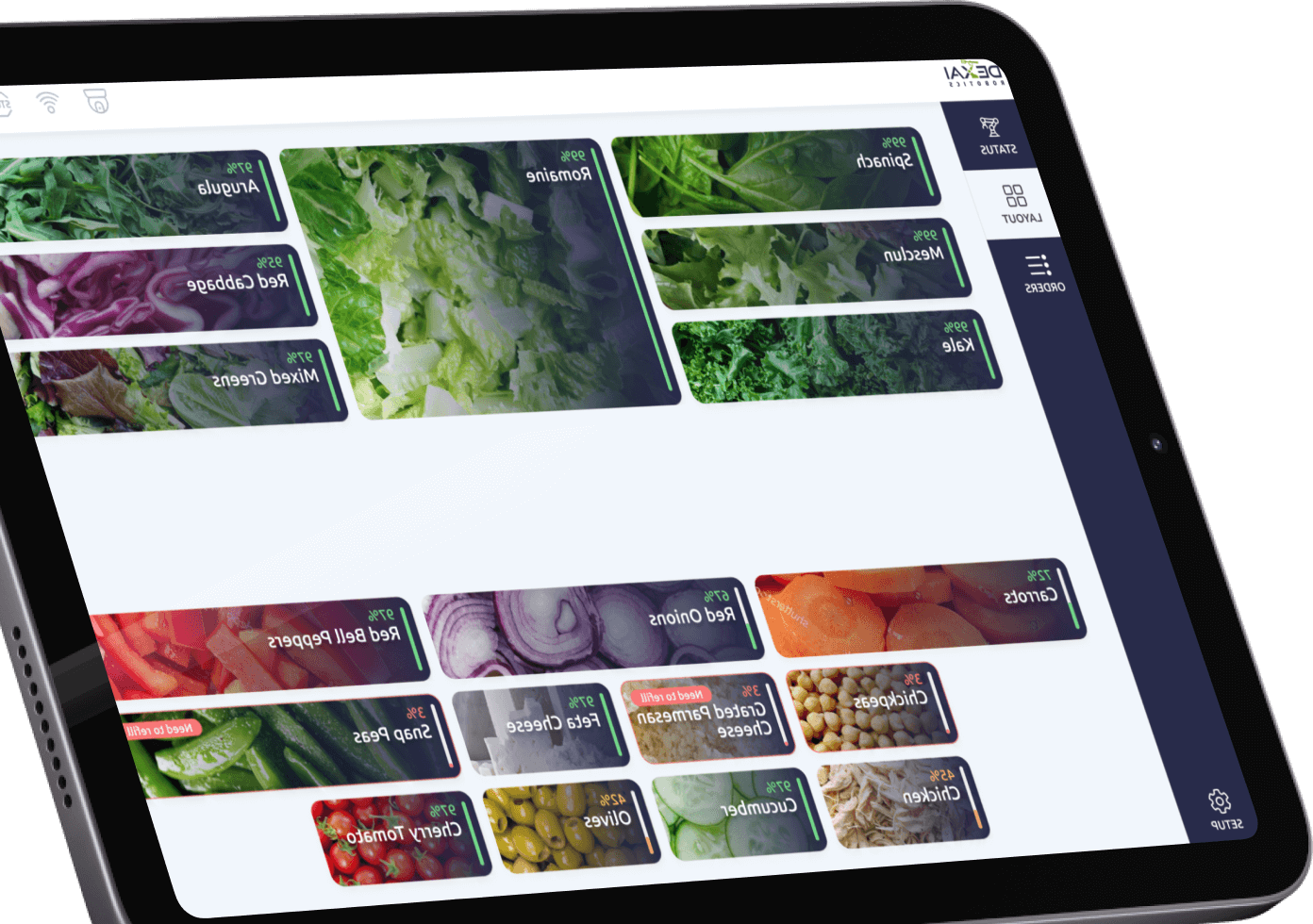

Data visualization

Predictive analytics & ML

Big Data consulting

Schedule a Free Big Data Consultation

Let’s talk about your data goals and how to turn raw information into business value.

Our Big Data development competencies

Below are the core areas where our team consistently delivers value.

Scalable architecture design

Big Data systems grow fast in volume, complexity, and number of users. Our development team builds scalable data platforms designed to process high volumes of data, handle long-running queries efficiently, and accommodate additional integrations as they are needed. We apply component modularity, distributed processing, elastic storage, load balancing, and decoupled services to keep platform performance consistent under load.
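Distributed processing starts with splitting data so that many workers can handle it in parallel. The sketch below illustrates key-based hash partitioning in plain Python, the same general idea streaming platforms such as Kafka use to route records with the same key to the same partition. The function names, the `user_id` field, and the partition count are hypothetical; a real platform delegates this to its framework.

```python
import zlib
from collections import defaultdict
from typing import Any, Dict, Iterable, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index using a stable hash.

    zlib.crc32 is used instead of the built-in hash(), because hash() of a str
    is randomized per process and would route keys differently on each run.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_records(records: Iterable[Dict[str, Any]], key_field: str,
                      num_partitions: int) -> Dict[int, List[Dict[str, Any]]]:
    """Group records so that all records sharing a key land in the same partition."""
    partitions: Dict[int, List[Dict[str, Any]]] = defaultdict(list)
    for record in records:
        partitions[partition_for(str(record[key_field]), num_partitions)].append(record)
    return dict(partitions)


if __name__ == "__main__":
    # Hypothetical events keyed by user.
    events = [{"user_id": "u1", "amount": 10}, {"user_id": "u2", "amount": 5},
              {"user_id": "u1", "amount": 7}]
    for idx, group in sorted(partition_records(events, "user_id", 4).items()):
        print(idx, group)  # each worker would process one group independently
```

Keeping all records for a key together preserves per-key ordering and locality. Note that with plain modulo hashing, changing the partition count remaps most keys, which is why platforms usually fix the partition count up front or use consistent hashing.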

ETL/ELT development & automation

Our talented team develops automated, fault-tolerant ETL and ELT pipelines that extract, transform, and load data from multiple sources into unified storage layers. We use proven tools such as Apache Airflow for orchestration and dbt for in-warehouse transformations to schedule, monitor, and version data workflows. These pipelines ensure timely, governed, and reproducible data movement, laying the groundwork for reliable reporting and downstream analytics.
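The plain-Python sketch below does not use Airflow or dbt. It only illustrates the extract-transform-load shape and a retry wrapper that makes a flaky source step fault-tolerant. The `id` and `amount` fields and all function names are hypothetical. In Airflow itself, the same behavior is normally configured through task-level settings such as `retries` and `retry_delay` rather than hand-written loops.

```python
import time
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]


def with_retries(fn: Callable[[], List[Record]], attempts: int = 3,
                 delay_s: float = 0.5) -> List[Record]:
    """Call fn, retrying on any exception with a linearly growing delay."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == attempts:
                raise  # give up and surface the last error to the scheduler
            time.sleep(delay_s * attempt)
    raise AssertionError("unreachable")


def transform(rows: Iterable[Record]) -> List[Record]:
    """Normalize types and drop rows that lack the (hypothetical) 'id' key field."""
    clean = []
    for row in rows:
        if row.get("id") is None:
            continue
        clean.append({"id": int(row["id"]), "amount": float(row.get("amount") or 0)})
    return clean


def run_pipeline(extract: Callable[[], List[Record]],
                 load: Callable[[List[Record]], None],
                 attempts: int = 3, delay_s: float = 0.5) -> int:
    """Extract with retries, transform, then load; return the number of rows loaded."""
    rows = with_retries(extract, attempts, delay_s)
    clean = transform(rows)
    load(clean)
    return len(clean)


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_extract() -> List[Record]:
        # Simulates a source that fails on the first call only.
        calls["n"] += 1
        if calls["n"] == 1:
            raise ConnectionError("source temporarily unavailable")
        return [{"id": "1", "amount": "9.5"}, {"id": None}, {"id": "2"}]

    warehouse: List[Record] = []
    print(run_pipeline(flaky_extract, warehouse.extend, delay_s=0.01), warehouse)
```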

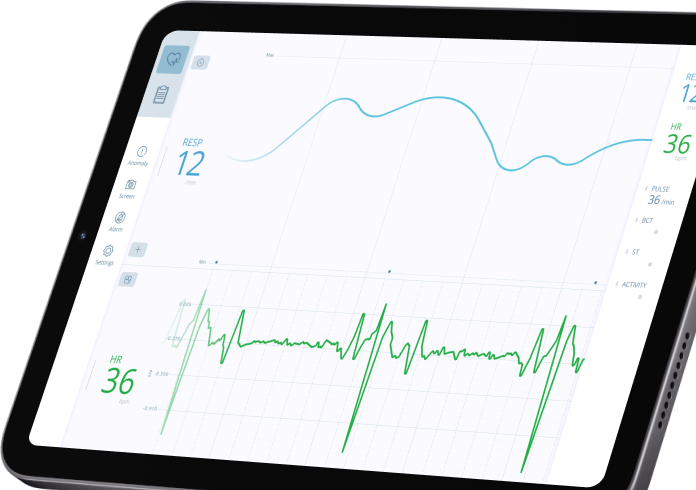

Real-time data processing

We develop Big Data solutions for systems that depend on real-time data processing and require immediate alerts and live dashboard updates, such as systems that detect events, anomalies, and trends. We use Apache Kafka, Flink, and AWS Kinesis to keep data available and up to date in real time, building systems for fraud detection, supply chain visibility, IoT telemetry, and other latency-sensitive use cases.
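Engines such as Flink express this kind of logic as windowed operators over a stream. The framework-free sketch below only illustrates one simple approach to anomaly alerts: flag a value that deviates sharply from a rolling window of recent values. The window size, the 3-standard-deviation threshold, and the sample data are illustrative assumptions.

```python
from collections import deque
from math import sqrt
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(values: Iterable[float], window: int = 20,
                     threshold: float = 3.0) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for each point lying more than `threshold` standard
    deviations from the mean of the preceding `window` points."""
    recent: Deque[float] = deque(maxlen=window)  # bounded state, as a stream operator would keep
    for i, x in enumerate(values):
        if len(recent) == window:
            mean = sum(recent) / window
            std = sqrt(sum((v - mean) ** 2 for v in recent) / window)
            if std > 0 and abs(x - mean) > threshold * std:
                yield i, x  # in a real system: emit an alert event
        recent.append(x)


if __name__ == "__main__":
    # Hypothetical transaction amounts with one spike at index 20.
    readings = [10, 11] * 10 + [50] + [10, 11] * 5
    for idx, value in detect_anomalies(readings):
        print(f"anomaly at {idx}: {value}")
```

Because the function is a generator over bounded state, it processes an unbounded stream one event at a time without holding the whole history in memory.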

Data governance & compliance

Comprehensive data governance means applying sound practices across the whole data lifecycle. On our side, we build robust ingestion pipelines using Kafka and NiFi; validate, deduplicate, clean, and tag all incoming data with metadata; design highly secure tiered storage (hot/warm/cold) to reduce cost and latency; and enforce data quality rules (type checks, null handling, threshold alerts) using tools like Great Expectations or dbt tests, among other practices. Our solutions support compliance alignment with GDPR, HIPAA, SOC 2, and other frameworks.
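Great Expectations and dbt tests declare rules like these as configuration. The plain-Python sketch below does not use either tool's API; it only shows what type checks, null handling, deduplication, and threshold alerts amount to for a single batch. The field names, the 10,000 amount threshold, and the 10% reject ratio are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


@dataclass
class QualityReport:
    valid: List[Row] = field(default_factory=list)
    rejected: List[Tuple[Row, str]] = field(default_factory=list)
    duplicates: int = 0
    alerts: List[str] = field(default_factory=list)


def check_batch(rows: List[Row], max_amount: float = 10_000.0,
                max_reject_ratio: float = 0.1) -> QualityReport:
    report = QualityReport()
    seen_ids = set()
    for row in rows:
        # Null handling: required fields must be present and non-null.
        if row.get("id") is None or row.get("amount") is None:
            report.rejected.append((row, "missing required field"))
            continue
        # Type check: amount must be numeric (bool is a subclass of int, so exclude it).
        amount = row["amount"]
        if isinstance(amount, bool) or not isinstance(amount, (int, float)):
            report.rejected.append((row, "amount is not numeric"))
            continue
        # Deduplication on the business key.
        if row["id"] in seen_ids:
            report.duplicates += 1
            continue
        seen_ids.add(row["id"])
        # Threshold alert: keep the row but flag unusually large values.
        if amount > max_amount:
            report.alerts.append(f"id={row['id']}: amount {amount} exceeds {max_amount}")
        report.valid.append(row)
    # Batch-level alert when too many rows fail validation.
    total = len(rows)
    if total and len(report.rejected) / total > max_reject_ratio:
        report.alerts.append(
            f"reject ratio {len(report.rejected)}/{total} exceeds {max_reject_ratio:.0%}")
    return report
```

Rejected rows are kept with a reason rather than dropped silently, so they can be routed to a quarantine table and reviewed.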

Advanced analytics & ML integration

We operationalize machine learning and advanced analytics within your data infrastructure. Leveraging libraries such as TensorFlow, PyTorch, and scikit-learn, we develop predictive models that segment users, forecast demand, and detect anomalies. These models are trained on real business data, deployed into pipelines, and monitored for accuracy and performance over time.
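Production forecasting models are usually built with libraries such as those named above. As a much simpler, dependency-free stand-in, simple exponential smoothing shows the basic idea of forecasting demand from history: each new observation updates a running level, weighted by a smoothing factor alpha. It is a baseline for comparison, not a substitute for a trained model, and the alpha value and weekly sales figures below are illustrative.

```python
from typing import List, Sequence


def exponential_smoothing(series: Sequence[float], alpha: float) -> List[float]:
    """Return smoothed levels: s_0 = y_0, s_t = alpha * y_t + (1 - alpha) * s_{t-1}."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        raise ValueError("series must not be empty")
    level = series[0]
    levels = [level]
    for y in series[1:]:
        level = alpha * y + (1 - alpha) * level
        levels.append(level)
    return levels


def forecast_next(series: Sequence[float], alpha: float = 0.5) -> float:
    """Simple exponential smoothing forecasts a flat value: the last smoothed level."""
    return exponential_smoothing(series, alpha)[-1]


if __name__ == "__main__":
    weekly_units = [120, 130, 125, 140]  # hypothetical demand history
    print(forecast_next(weekly_units, alpha=0.5))
```

A higher alpha reacts faster to recent changes; a lower alpha smooths out noise. Models that also capture trend or seasonality extend this same update rule.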

Multi-source data integration

Our engineers unify disparate datasets from CRMs, ERPs, IoT devices, third-party APIs, and raw files into a single coherent platform. We design connectors and streaming logic that reconcile formats, schemas, and data quality issues at scale. This comprehensive integration unlocks end-to-end visibility across operations and eliminates costly data silos.
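Reconciling schemas mostly comes down to mapping each source's field names, types, and formats onto one canonical record, then merging on a shared key. The sketch below assumes two hypothetical sources, a CRM export and an ERP table, with different column names and date formats; every field name here is invented for illustration.

```python
from datetime import datetime
from typing import Any, Dict, List

# Hypothetical source schemas: unified field -> source column name.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "crm": {"customer_id": "CustomerID", "email": "Email", "created": "Created"},
    "erp": {"customer_id": "cust_no", "email": "email_address", "created": "created_at"},
}
# Each source serializes dates differently (ISO vs. day/month/year).
DATE_FORMATS: Dict[str, str] = {"crm": "%Y-%m-%d", "erp": "%d/%m/%Y"}


def normalize(source: str, row: Dict[str, Any]) -> Dict[str, Any]:
    """Rename columns, coerce types, and clean values into the canonical shape."""
    cols = FIELD_MAPS[source]
    return {
        "customer_id": int(row[cols["customer_id"]]),
        "email": str(row[cols["email"]]).strip().lower(),
        "created": datetime.strptime(row[cols["created"]], DATE_FORMATS[source]).date(),
    }


def unify(batches: Dict[str, List[Dict[str, Any]]]) -> Dict[int, Dict[str, Any]]:
    """Merge normalized rows on customer_id.

    The first email seen wins, the earliest creation date is kept, and the
    contributing sources are recorded for lineage.
    """
    merged: Dict[int, Dict[str, Any]] = {}
    for source, rows in batches.items():
        for row in rows:
            rec = normalize(source, row)
            entry = merged.setdefault(rec["customer_id"], {**rec, "sources": []})
            entry["created"] = min(entry["created"], rec["created"])
            entry["sources"].append(source)
    return merged


if __name__ == "__main__":
    print(unify({
        "crm": [{"CustomerID": "42", "Email": " A@X.com ", "Created": "2024-01-05"}],
        "erp": [{"cust_no": 42, "email_address": "a@x.com", "created_at": "03/01/2024"}],
    }))
```

Keeping the mappings as data rather than code means a new source can often be added by registering its column names and date format.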

Visualization-ready output

We tailor data output for usability by different business stakeholders – ensuring that processed datasets are optimized for BI tools or custom dashboards. Whether through Tableau, Power BI, or bespoke visualizations, we present data in a form that is both technically sound and immediately actionable. As a result, teams at all levels can confidently explore, report, and act on insights.

Industries we empower with Big Data

Our Big Data solutions drive success across multiple industries. We tailor each solution to industry-specific needs and challenges:

Finance & FinTech

Real-time fraud detection, risk analytics, algorithmic trading platforms, and customer credit scoring systems. We help financial institutions harness Big Data to boost security and enable smarter investments.

Healthcare

Patient data analytics, predictive healthcare outcomes, IoT medical device data processing, and clinical research data management. Our solutions improve patient care and operational efficiency in health services.

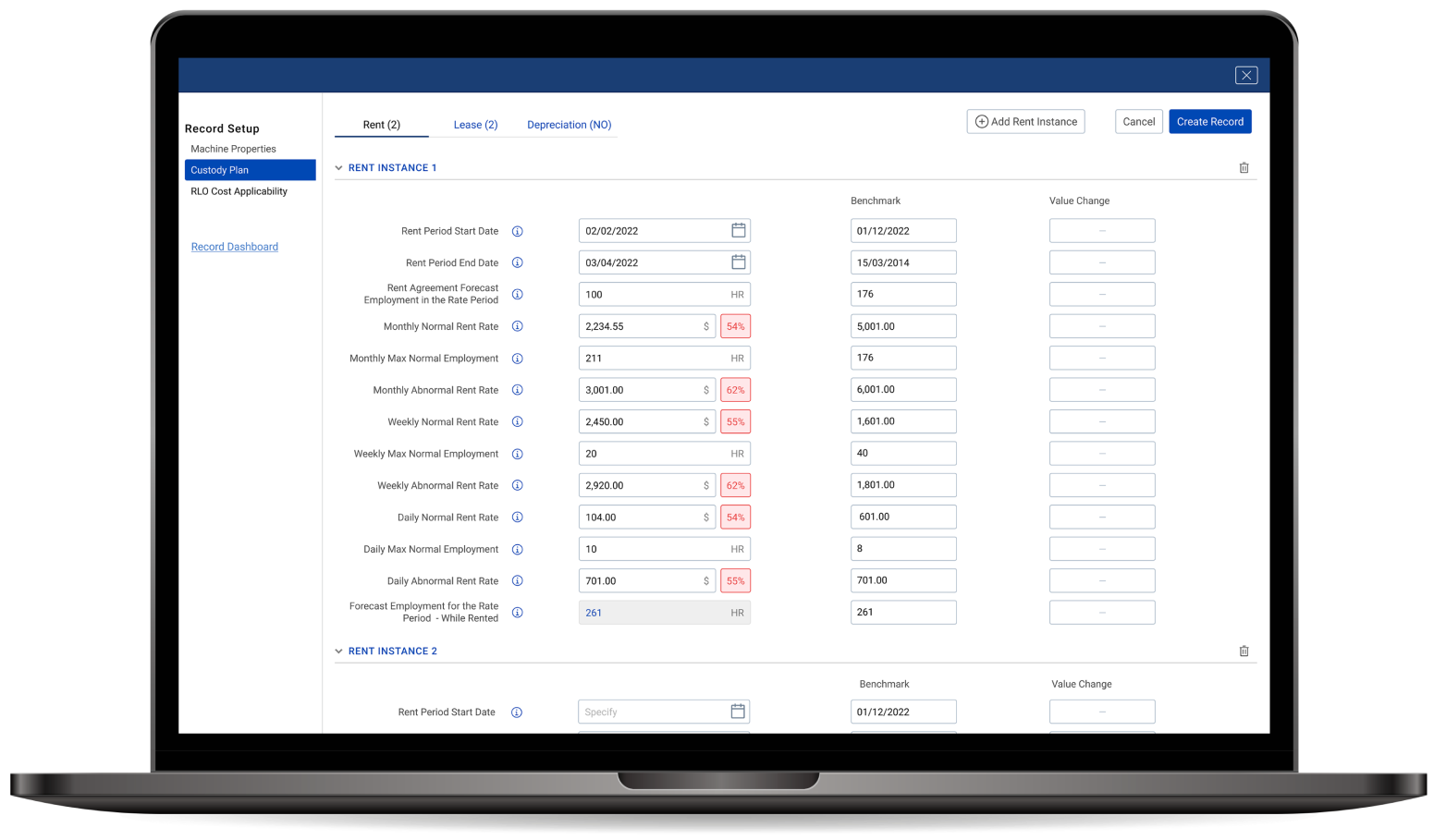

Retail & E-Commerce

Customer behavior analysis, personalized recommendation engines, demand forecasting, and inventory optimization. We enable retailers to boost sales and customer engagement with data-driven insights.

Manufacturing

IoT-driven predictive maintenance, quality control analytics, supply chain and production optimization. We help manufacturers reduce downtime and improve productivity by leveraging sensor and operational data.

Advertising & Media

Ad campaign performance analytics, audience segmentation, real-time bidding systems, and content personalization. Our Big Data platforms empower AdTech and Media companies to maximize ROI on advertising spend and user engagement.

Logistics & Transportation

Route optimization, fleet management analytics, demand and supply forecasting. We build data solutions that streamline logistics operations, cut costs, and improve delivery efficiency.

Request a Project Estimate

Receive a detailed estimate for building your Big Data platform — no commitment required.

Technologies we work with

Databases (relational & NoSQL)

- PostgreSQL

- MySQL

- Microsoft SQL Server

- MongoDB

- Redis

- Cassandra

- AWS DynamoDB

- Apache HBase

- ClickHouse

- Neo4j

Data warehousing & OLAP

- Amazon Redshift

- Google BigQuery

- Snowflake

- ClickHouse

- Cloudera

- DataStax

Streaming & real-time processing

- Apache Kafka

- Apache Kudu

- AWS Kinesis

- Google Pub/Sub

- Apache NiFi

- MQTT / WebSockets

Monitoring & metrics

- InfluxDB

- Chronograf

- Graphite

- Prometheus

- Grafana

Analytics & business intelligence

- Google Analytics

- Power BI

- Tableau

- Looker

- Superset

- Metabase

- Grafana

In-memory caching & acceleration

- Redis

- Memcached

What it takes to build a Data-powered app

Why Big Data matters for businesses

Because decisions based on guesswork are expensive

Every business makes thousands of choices daily: what to sell, where to allocate budget, which Clients to prioritize, which service to promote, and more. Big Data development services help convert your internal and external data into decision-grade insights. That eliminates guesswork because you get knowledge rather than opinions.

Because real-time wins

Static reports are dead. By the time traditional BI shows a sales decline, the damage is done. Big Data development services give businesses streaming analytics, allowing them to react instantly to customer behavior, market changes, or system anomalies. Speed becomes your weapon.

Because your competitors already use them

The top companies in every industry, like Amazon, Netflix, or Tesla, don’t guess. They use predictive models, recommendation engines, demand forecasting, and user segmentation powered by Big Data. If you don’t, you’re playing a slower, blinder game.

Because the data flood is only getting bigger

IoT sensors, CRM logs, transaction systems, web tracking – the average company’s data volume grows exponentially. Without a proper system to collect, clean, store, and analyze it, you’re paying to lose information. Big Data development services are not optional anymore — they are infrastructure.

Because personalization = revenue

Today’s users expect tailored offers, real-time feedback, and smart recommendations. Big Data development services enable hyper-personalized experiences, increasing conversion rates, retention, and lifetime value.

Because inefficiency hides in plain sight

Poorly performing ads, inventory pile-ups, machine breakdowns – they often leave subtle traces in data long before they cause real damage. Big Data systems help surface these signals early through anomaly detection, pattern recognition, and root cause analysis.

Because growth needs a foundation

Startups scale fast. Enterprises optimize continuously. In both cases, systems that process, analyze, and visualize large-scale data in real time are the backbone of sustainable growth. Our Big Data development services build the foundation for this growth.

Turn Big Data into Big Results

We help you extract insights, optimize operations, and innovate faster with end-to-end data systems.

Benefits of our Big Data solutions

Our Big Data systems are built to deliver measurable business impact from day one. Here’s what you can expect from our Big Data solutions.

Cost efficiency by design

We optimize infrastructure at every level: storage, processing, and data transfer. This results in scalable solutions without bloated cloud bills. We use the right mix of cloud-native tools, open-source tech, and smart architecture to cut recurring costs by up to 40%.

High data quality

Automated cleansing, validation, and governance ensure your decisions rely on consistent, trustworthy data, not noise. This reduces the risk of false insights and improves confidence across all data-driven operations.

Future-proof architecture

We build with scale in mind: distributed systems, modular pipelines, and cloud-native components that grow with your business. When your data volume grows 10×, your platform keeps pace – without reengineering.

Faster decision-making

Real-time data pipelines and dashboards give you instant insights — so you act faster, not after the fact. Decisions that once took days now happen in minutes, based on live metrics, not static reports.

Integrated intelligence

Predictive analytics, anomaly detection, segmentation – embedded directly into your workflows for smarter operations. You move from reactive reporting to proactive action with ML models tuned to your real-world data.

End-to-end visibility

From raw data ingestion to polished dashboards, you see the full picture – and control every layer of your data landscape. Executives, analysts, and operators work from a shared source of truth, reducing silos and missed signals.

Why choose SumatoSoft for Big Data development

Deep expertise

Our team has 14+ years of experience in providing software development services with 350+ projects delivered. With 70% senior engineers on board, we’ve solved complex Big Data challenges across fintech, healthcare, IoT, adtech, and more. We leverage this know-how to build the optimal solution for you.

Quality & security

We never compromise on quality. Senior developers and our CTO rigorously review code for every project to ensure clean, efficient architecture. Combined with thorough QA testing, this guarantees your Big Data system is stable, secure, and scalable from day one.

Transparent collaboration

Enjoy full project visibility and control. We plan clear milestones and provide detailed weekly or sprint reports, so you always know the progress and next steps. You can track development in real time (via Jira/Confluence), and we're always available via meetings or chats. No surprises, no hidden costs: you're a part of the process.

Proven track record

Our Clients’ results and feedback speak volumes. For example, we delivered a custom Big Data platform for an advertising company that ran 50× faster than their previous solution. Our commitment to Client success has earned us a 98% satisfaction rate and top ratings (5.0/5 on GoodFirms, 4.8/5 on Clutch). When you work with SumatoSoft, you partner with a team trusted by startups and enterprises worldwide.

Our recent works

See Real Big Data Projects in Action

Explore how we’ve helped companies turn massive datasets into measurable impact.

Our Big Data software development process

We build our Big Data development process around one goal: generating business value from the data. Our process is fast, transparent, and designed to deliver measurable outcomes at every stage.

We start by aligning on business goals, pain points, and the role data should play in decision-making. Through technical audits and stakeholder interviews, we uncover system gaps, inefficiencies, and integration challenges. You get a mapped data flow, high-level system concept, and a roadmap that connects your data to ROI.

You get: clear data strategy, tech audit results, mapped risks, and solution outline.

We design a modular, scalable architecture based on your use case: real-time vs batch, structured vs unstructured, low-latency vs archival. We pick the right stack – Kafka, Spark, S3, Redshift, Airflow, or Snowflake – with cost and performance optimization in mind. Every component is chosen for a reason and designed to scale without bloat.

You get: architecture diagram, component justification, and infrastructure blueprint.

We build ingestion pipelines, transform raw inputs, and consolidate your data into a single source of truth. Cleansing, validation, deduplication, and governance are baked into every stage, ensuring quality and compliance. Whether it’s APIs, sensors, CRMs, or logs – your data flows reliably and cleanly.

You get: real-time or batch pipelines, clean datasets, and governed storage (lake or warehouse).

We create the logic layer behind metrics, KPIs, dimensions, and filters – the foundation of insights. BI tools are integrated with user roles, caching, and dynamic query optimization. The result: real-time visibility without performance drops or analyst bottlenecks.
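A metrics layer defines each KPI once, as an aggregation over named dimensions with optional filters, and caches repeated queries. The toy sketch below illustrates that idea in plain Python over an in-memory table. In practice this logic lives in the warehouse and the BI tool's modeling layer; the table, dimension names, and figures are hypothetical, and an in-process cache like this one would need clearing whenever the underlying data changes.

```python
from collections import defaultdict
from functools import lru_cache
from typing import Dict, Optional, Tuple

# Hypothetical fact table rows: (region, channel, revenue).
SALES: Tuple[Tuple[str, str, float], ...] = (
    ("EU", "web", 120.0),
    ("EU", "store", 80.0),
    ("US", "web", 200.0),
    ("US", "web", 50.0),
)
# Dimensions the metric can be sliced by, mapped to their column position.
DIMENSIONS: Dict[str, int] = {"region": 0, "channel": 1}


@lru_cache(maxsize=128)
def revenue_by(dimension: str, channel: Optional[str] = None) -> Tuple[Tuple[str, float], ...]:
    """Total revenue grouped by one dimension, optionally filtered by channel.

    Results are cached per argument combination and returned as an immutable
    tuple so callers cannot mutate a cached result.
    """
    col = DIMENSIONS[dimension]
    totals: Dict[str, float] = defaultdict(float)
    for row in SALES:
        if channel is not None and row[1] != channel:
            continue
        totals[row[col]] += row[2]
    return tuple(sorted(totals.items()))


if __name__ == "__main__":
    print(revenue_by("region"))
    print(revenue_by("region", "web"))
    print(revenue_by.cache_info())
```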

You get: a high-performance BI backend connected to Tableau, Looker, or Power BI.

We build dashboards that work – clear, responsive, and role-based. Visuals are optimized for decision-making, whether you’re a C-level executive or an operations analyst. If needed, we go beyond standard tools and deliver custom UIs built on D3.js or React.

You get: production-ready dashboards tailored for real-world usage and user types.

We simulate real-world usage: concurrent queries, late-arriving data, malformed payloads, and system downtime. Every part of the system is verified against business logic and edge cases. Failures are caught early, logs are structured, and alerting is in place.
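Late-arriving data is one of the edge cases listed above. A common way to handle it, used by event-time stream processors such as Flink, is a watermark with an allowed-lateness bound. The sketch below is a simplified, framework-free version of that idea for tumbling windows. The 60-second window, the 30-second lateness bound, and the `(timestamp, payload)` event format are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple

Event = Tuple[int, str]  # (event time in seconds, payload)


def window_counts(events: Iterable[Event], window_s: int = 60,
                  allowed_lateness_s: int = 30) -> Tuple[Dict[int, int], List[Event]]:
    """Count events per tumbling event-time window, keyed by window start.

    The watermark trails the largest event time seen by allowed_lateness_s.
    Out-of-order events are still counted while their window is open; events
    whose window ended at or before the watermark go to a separate 'too late'
    list instead of silently changing already-published numbers.
    """
    counts: Dict[int, int] = defaultdict(int)
    too_late: List[Event] = []
    max_seen: Optional[int] = None
    for ts, payload in events:
        window_start = ts - ts % window_s
        if max_seen is not None and window_start + window_s <= max_seen - allowed_lateness_s:
            too_late.append((ts, payload))
            continue
        counts[window_start] += 1
        max_seen = ts if max_seen is None else max(max_seen, ts)
    return dict(counts), too_late


if __name__ == "__main__":
    # Event at t=50 arrives out of order but in time; t=10 arrives after its window closed.
    print(window_counts([(5, "a"), (70, "b"), (50, "c"), (200, "d"), (10, "e")]))
```

In a test suite, replaying a shuffled or delayed copy of recorded events through logic like this is one way to check that late data is counted or quarantined as intended.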

You get: a stable, tested, and monitored Big Data platform – production-grade from day one.

We deploy everything via CI/CD pipelines and set up monitoring (e.g., Grafana, Sentry, Prometheus) for transparency and uptime. We support post-launch iterations, train your team, and transfer full system ownership. If needed, we stay on to scale, optimize, and expand.

You get: a fully operational platform, team enablement, and optional long-term support.

Awards & Recognitions

Let’s start

If you have any questions, email us at info@sumatosoft.com.

Frequently asked questions

What are the services of Big Data?

Big Data services include data storage, processing, analytics, visualization, and consulting to help businesses gain insights from large datasets.

What are examples of Big Data sources?

Examples include social media, IoT devices, weblogs, customer transactions, and sensor data, which generate large volumes of data in various formats.

What does a Big Data developer do?

Big Data developers design, build, and maintain the systems behind Big Data solutions, covering system design, development, data processing, analytics setup, and much more. To do so, they develop ETL/ELT pipelines, work with distributed storage (like HDFS or Amazon S3), configure real-time data processing (e.g., using Apache Kafka and Spark), and integrate with BI tools for reporting. Their goal is to transform raw, unstructured data into reliable, accessible, and actionable insights.

What is a Big Data company?

A Big Data software development company is a professional service provider that helps other companies benefit from Big Data technology. Such companies specialize in handling high-volume, high-velocity, and high-variety data in different industries, from finance to healthcare to logistics.

For example, SumatoSoft is a Big Data development company that builds Big Data platforms that help businesses thrive on their own data and use it as a competitive advantage.

Is Big Data a high-paying job?

Yes, Big Data roles are among the highest-paying in tech due to their complexity, scarcity of talent, and direct business value. Developers, engineers, architects, and data scientists working with distributed systems, cloud pipelines, and real-time analytics often command salaries well above industry averages. This is especially true in regulated or data-intensive sectors like finance, healthcare, and e-commerce.